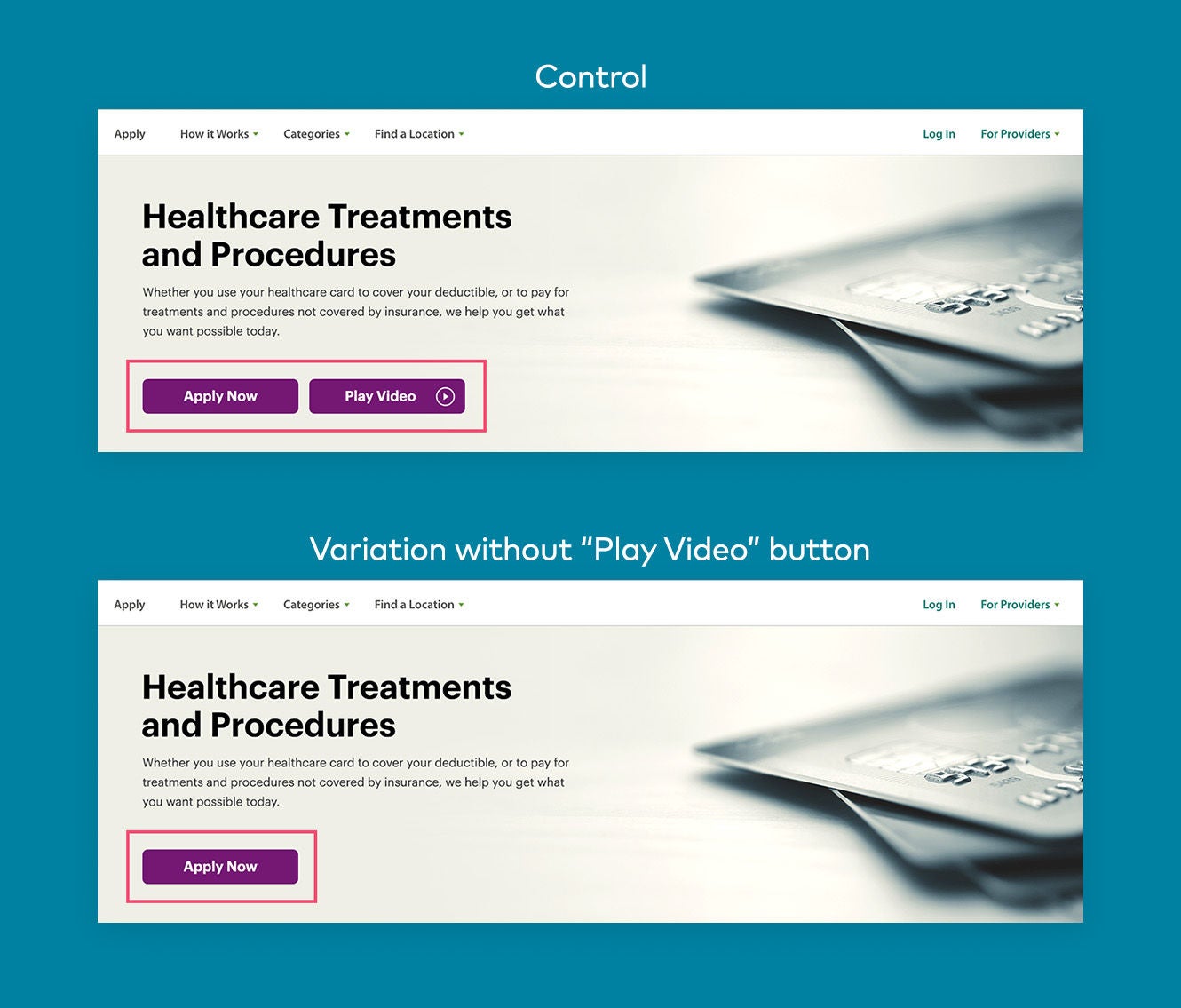

The premise of A/B testing is simple:

Compare two (or more) versions of something to see which performs better, then deploy the winner to all users for the best overall experience.

Accordingly, A/B testing and conversion rate optimization (CRO) teams have invested heavily in launching all sorts of experiments across the site, native app, email, and other digital channels, then continuously optimizing them to drive incremental uplift in conversions and other KPIs over time.

However, unless a company generates enormous traffic and has a large digital footprint to experiment on, it may reach a point of diminishing returns, where additional experimentation effort (no matter how many tests are run or how sophisticated they are) yields little further improvement.

This is largely because the classic approach to A/B testing offers a binary view of visitor preferences and often fails to capture the full range of factors and behaviors that define visitors as individuals.

Moreover, A/B tests yield generalized results that reflect the preferences of a segment's majority. So while a brand may find that a particular experience yields more revenue on average, deploying it to all users does a disservice to the significant portion of consumers with different preferences.

Let me illustrate with a few examples:

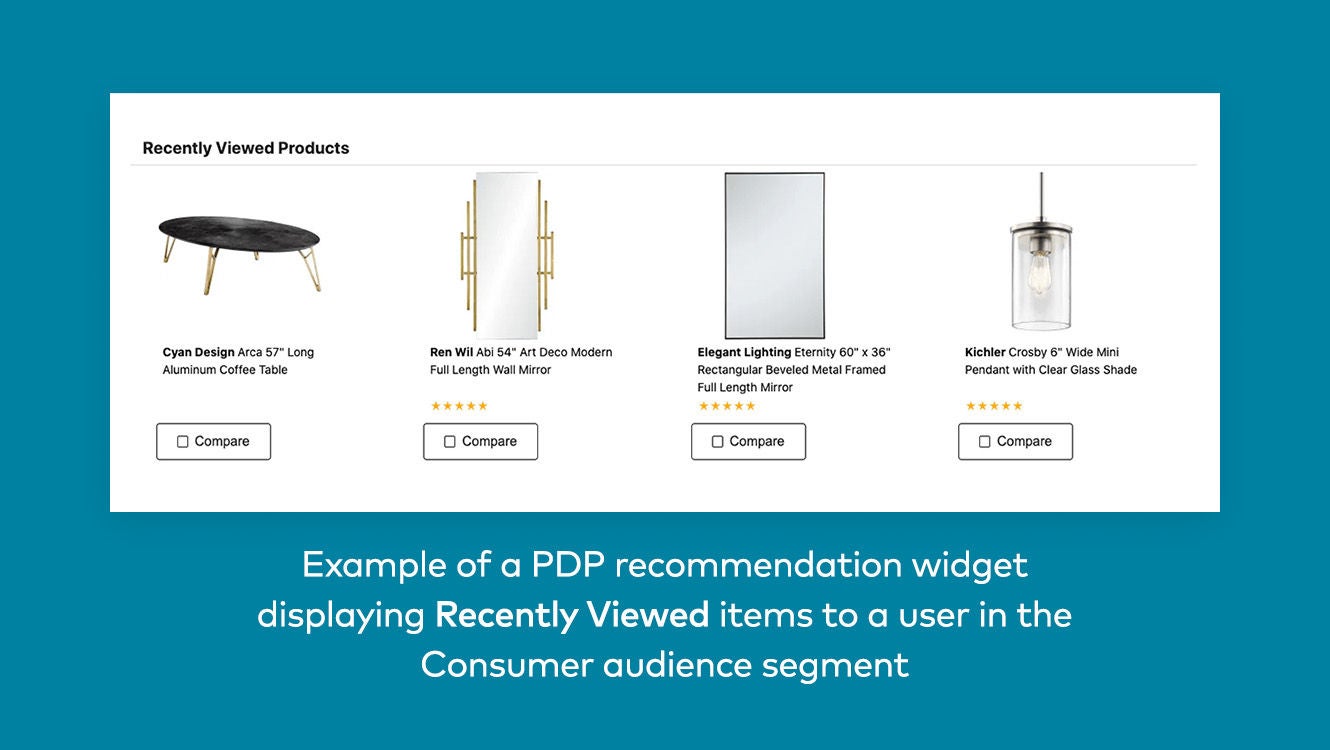

If Warren Buffett and I had an average net worth of $117.3 billion USD, would it make sense to recommend the same products to both of us?

Probably not.

Or consider a retailer that sells both men's and women's products and runs a classic A/B test on its homepage to identify the top-performing hero banner variation. Since 70% of its audience is women, the women's variation outperforms the men's.

The test would suggest applying the women's hero banner to the entire population, but that clearly wouldn't be the right decision for the remaining 30% of the audience.
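To make the hero banner example concrete, here is a minimal Python sketch with made-up numbers. The 70% share of women comes from the example; treating the remaining 30% as men, the conversion rates, and the names `SEGMENT_SHARE`, `CONVERSION_RATE`, `blended_rate`, and `personalized_rate` are all hypothetical. It compares the overall conversion rate when every visitor sees one banner with the rate when each segment sees its own best banner.

```python
# Hypothetical traffic mix and conversion rates (illustrative only,
# not taken from any real test).
SEGMENT_SHARE = {"women": 0.70, "men": 0.30}

# CONVERSION_RATE[variation][segment] = probability that a visitor converts
CONVERSION_RATE = {
    "womens_banner": {"women": 0.05, "men": 0.01},
    "mens_banner": {"women": 0.02, "men": 0.06},
}


def blended_rate(variation: str) -> float:
    """Overall conversion rate if every visitor sees `variation`."""
    return sum(
        share * CONVERSION_RATE[variation][segment]
        for segment, share in SEGMENT_SHARE.items()
    )


def personalized_rate() -> float:
    """Overall conversion rate if each segment sees its own best variation."""
    return sum(
        share * max(rates[segment] for rates in CONVERSION_RATE.values())
        for segment, share in SEGMENT_SHARE.items()
    )


if __name__ == "__main__":
    for variation in CONVERSION_RATE:
        print(f"{variation}: {blended_rate(variation):.1%}")
    winner = max(CONVERSION_RATE, key=blended_rate)
    print(f"A/B winner for everyone: {winner}")
    print(f"Best variation per segment: {personalized_rate():.1%}")
```

With these illustrative numbers, the women's banner wins the blended test (3.8% vs. 3.2%), yet serving each segment its better-performing banner lifts the overall rate to 5.3%.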

To put it simply:

- Averages are often misleading when used to compare different user groups

- The best-performing variation can differ for each customer segment, and even for each individual user

- Results can also be influenced by contextual factors such as geolocation, weather, and more
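The same effect appears whenever results are sliced by segment or context. The following sketch uses hypothetical data, hypothetical field names (`variation`, `segment`, `geo`, `visitors`, `conversions`), and a hypothetical `winners_by` helper. It totals visitors and conversions per group and picks the variation with the highest conversion rate in each group; grouping by nothing reproduces the classic single winner.

```python
from collections import defaultdict

# Hypothetical experiment results: one row per (variation, segment, geo) cell.
# All field names and numbers are illustrative assumptions.
RESULTS = [
    {"variation": "A", "segment": "new", "geo": "US", "visitors": 1000, "conversions": 50},
    {"variation": "B", "segment": "new", "geo": "US", "visitors": 1000, "conversions": 30},
    {"variation": "A", "segment": "returning", "geo": "US", "visitors": 500, "conversions": 20},
    {"variation": "B", "segment": "returning", "geo": "US", "visitors": 500, "conversions": 35},
    {"variation": "A", "segment": "new", "geo": "UK", "visitors": 400, "conversions": 8},
    {"variation": "B", "segment": "new", "geo": "UK", "visitors": 400, "conversions": 16},
]


def winners_by(results, keys):
    """Return the variation with the highest conversion rate for each group.

    A group is the tuple of values of `keys` in a row; `keys=()` puts every
    row into one group, which mimics a classic winner-take-all A/B test.
    """
    # (group, variation) -> [total visitors, total conversions]
    totals = defaultdict(lambda: [0, 0])
    for row in results:
        group = tuple(row[k] for k in keys)
        cell = totals[(group, row["variation"])]
        cell[0] += row["visitors"]
        cell[1] += row["conversions"]

    best = {}  # group -> (variation, conversion rate)
    for (group, variation), (visitors, conversions) in totals.items():
        rate = conversions / visitors
        if group not in best or rate > best[group][1]:
            best[group] = (variation, rate)
    return {group: variation for group, (variation, _) in best.items()}


if __name__ == "__main__":
    print(winners_by(RESULTS, ()))            # one winner for everyone
    print(winners_by(RESULTS, ("segment",)))  # winner per customer segment
    print(winners_by(RESULTS, ("geo",)))      # winner per geolocation
```

With this data, B wins overall, while A wins for new visitors and in the US. The sketch ignores sample size and statistical significance; in practice, smaller groups need more traffic before a per-segment winner can be trusted.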

This is not to say, of course, that there isn't a time and place for leveraging more generalized results. For instance, if you were testing a new website or app design, it would make sense to aim for one consistent UI that works best on average rather than dozens, hundreds, or even thousands of UI variations for different users.

However, the days of faithfully taking a "winner-take-all" approach to page layouts, messaging, content, recommendations, offers, and other creative elements are over. And that's okay: delivering the best variation to each individual user means brands no longer leave money on the table through missed personalization opportunities.