Online scammers are already using LLMs to write more convincing and targeted phishing emails, and to send them at a much bigger scale than they ever could have without AI tools.

Gone are the days of poorly written generic emails that even the least tech-savvy person could spot as scams. Non-native English-speaking scammers are now pros at polishing their messages with LLMs.

And even those scammers posing as lonely soldiers stationed overseas, pushing a business opportunity that looks too good to be true (because it actually is), are sprinkling in personal details mined by AI to make those messages much more believable.

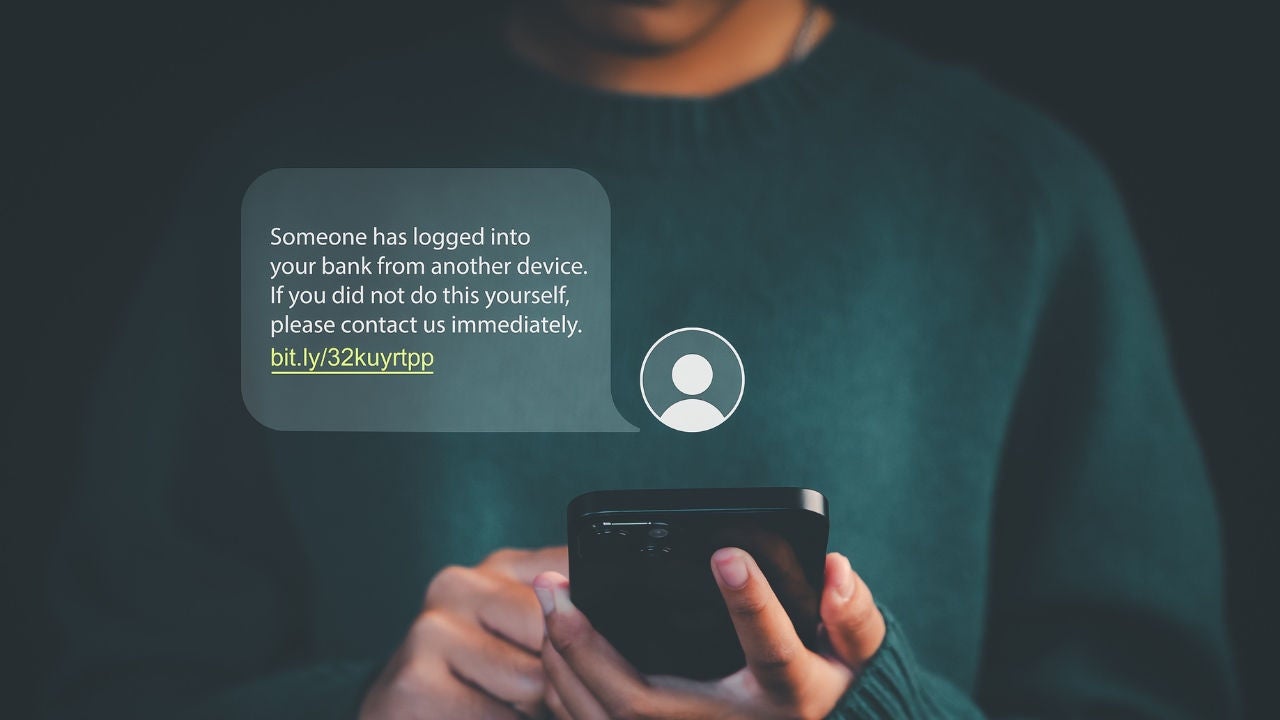

They’ve also moved beyond email, delivering their now well-crafted communications through social media, texts and even phone calls.

At the same time, there’s been a rise in scam messages containing audio or video deepfakes. Last year, a worker in Hong Kong was duped into paying out $25 million to fraudsters after signing on to what he thought was a video call with officials at his multinational company, including the chief financial officer. But it turned out that the others on the call were live-video deepfake recreations of those people.

Consumers also have been targeted in audio deepfake scams. That’s where cybercriminals use AI to clone the voice of a person, typically someone younger, then use the mimicked voice to call family members, claiming that the person has been kidnapped or is in jail, to extort money from the family.

All of that has companies and consumers understandably scared. A recent study conducted for Mastercard polled about 13,000 consumers around the world, including about 1,000 in the U.S., and found AI-generated fake content is the No. 1 scam-related future concern for consumers. But only 13% of those polled said they are very confident in their ability to identify AI-generated threats or scams if they are targeted.

The vast majority of those polled specifically cited concerns about more sophisticated attacks from AI systems being hacked and turned malicious, automated large-scale cyberattacks, and more convincing phishing emails created by AI.

And worried consumers can mean big problems for the companies that serve them. If consumers can’t trust that they’re dealing with a legitimate company or that their personal information is safe, they could choose to take their business elsewhere.